Midjourney, one of the most popular AI image generation companies, announced on Wednesday the launch of its much-anticipated AI video generation model, V1.

V1 is an image-to-video model: users can upload an image, or take an image generated by one of Midjourney’s other models, and V1 will produce a set of four five-second videos based on it. Much like Midjourney’s image models, V1 is only available through Discord, and it is only available on the web at launch.

The launch of V1 puts Midjourney in competition with AI video generation models from other companies, such as OpenAI’s Sora, Runway’s Gen 4, Adobe’s Firefly, and Google’s Veo. While many companies are focused on developing controllable AI video models for use in commercial settings, Midjourney has always stood out for its distinctive AI image models.

The company says it has bigger goals for its AI video models than generating B-roll for Hollywood movies or commercials for the advertising industry. In a blog post, Midjourney CEO David Holz says the AI video model is the company’s next step toward its ultimate destination: creating AI models “capable of real-time open world simulations.”

After AI video models, Midjourney says it plans to develop AI models for producing 3D renderings, as well as real-time AI models.

The launch of Midjourney’s V1 model comes just a week after the startup was sued by two of Hollywood’s most famous film studios: Disney and Universal. The suit alleges that images created by Midjourney’s AI image models depict the studios’ copyrighted characters, such as Homer Simpson and Darth Vader.

Hollywood studios have struggled to confront the growing popularity of AI image and video generation models, such as the ones Midjourney develops. There is a growing fear that these AI tools could replace or devalue the work of creatives in their respective fields, and several media companies have alleged that these products are trained on their copyrighted works.

While Midjourney has tried to pitch itself as different from other AI image and video startups, more focused on creativity than immediate commercial applications, the startup cannot escape these accusations.

To start, Midjourney says it will charge 8x more for a video generation than for a typical image generation, meaning that subscribers will run out of their monthly allotted generations significantly faster when creating videos than when creating images.
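To make the 8x figure concrete, here is a rough sketch of how quickly an allotment would drain. It assumes a video generation costs exactly eight times an image generation; the function name and the 200-generation budget are hypothetical, chosen only for illustration, and are not figures from Midjourney.

```python
# Assumption: one video generation costs exactly 8x one image generation.
VIDEO_COST_MULTIPLIER = 8


def videos_for_budget(image_budget: int) -> int:
    """Return how many video generations fit in a budget counted in image generations."""
    if image_budget < 0:
        raise ValueError("image_budget must be non-negative")
    return image_budget // VIDEO_COST_MULTIPLIER


# Hypothetical budget of 200 image generations (not a real plan figure).
print(videos_for_budget(200))  # 25
```

Under that assumption, a budget that covers 200 image generations would cover only 25 video generations.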

At launch, the cheapest way to try V1 is by subscribing to Midjourney’s $10-per-month Basic plan. Subscribers to Midjourney’s $60-per-month Pro plan and $120-per-month Mega plan will have unlimited video generations in the company’s slower, “relaxed” mode. Over the next month, Midjourney says it will reassess its pricing for video models.

V1 comes with a few custom settings that let users control the video model’s output.

Users can select an automatic animation setting to make an image move randomly, or they can select a manual setting that lets them describe, in text, a specific animation they want to add to their video. Users can also adjust the amount of camera and subject movement by choosing “low motion” or “high motion” in settings.

While the videos generated with V1 are only five seconds long, users can choose to extend them by four seconds up to four times, meaning that V1 videos could run as long as 21 seconds.
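The 21-second ceiling follows directly from those numbers: five seconds plus four extensions of four seconds each. A minimal sketch of the arithmetic, using hypothetical constant and function names:

```python
BASE_SECONDS = 5        # length of an initial V1 video
EXTENSION_SECONDS = 4   # length added by each extension
MAX_EXTENSIONS = 4      # maximum number of extensions


def video_length(extensions: int) -> int:
    """Return the total video length in seconds after a given number of extensions."""
    if not 0 <= extensions <= MAX_EXTENSIONS:
        raise ValueError(f"extensions must be between 0 and {MAX_EXTENSIONS}")
    return BASE_SECONDS + EXTENSION_SECONDS * extensions


# Possible lengths for zero through four extensions.
lengths = [video_length(n) for n in range(MAX_EXTENSIONS + 1)]
print(lengths)  # [5, 9, 13, 17, 21]
```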

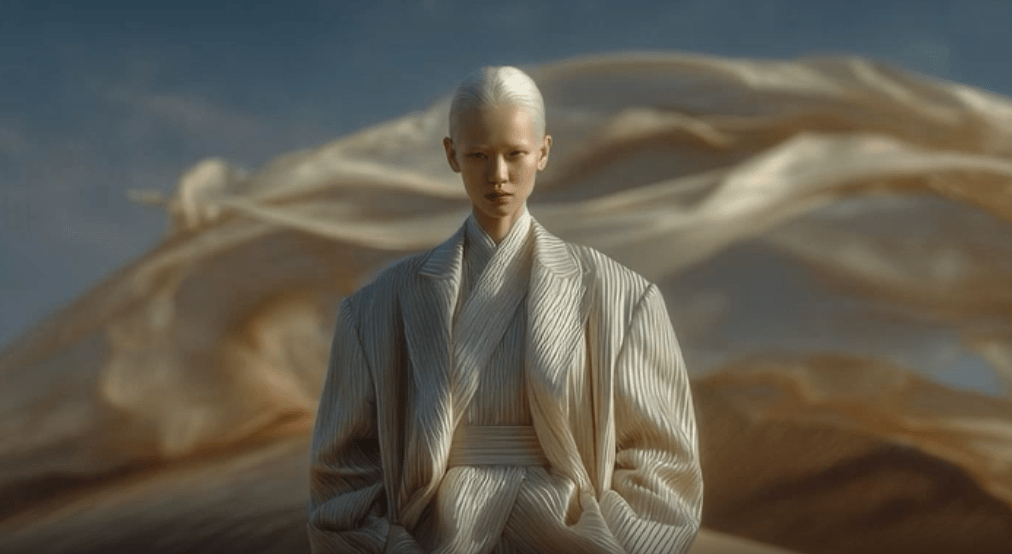

Similar to Midjourney’s AI image models, early demos of V1’s videos look somewhat otherworldly rather than hyperrealistic. The initial response to V1 has been positive, though it is still unclear how well it stacks up against other leading AI video models, which have been on the market for months or even years.