Earlier this month, Adrian Holovaty, founder of the music-teaching platform Soundslice, solved a mystery that had been plaguing him for weeks. Weird images of what were clearly ChatGPT sessions kept being uploaded to the site.

Once he solved it, he realized that ChatGPT had become one of his company's greatest hype men, but it was also lying to people about what his app could do.

Holovaty is best known as one of the creators of the open source Django project, a popular Python web development framework (though he retired from maintaining the project in 2014). In 2012, he launched Soundslice, which remains "proudly bootstrapped," he tells TechCrunch. Currently, he's focused on his music career, both as an artist and as a founder.

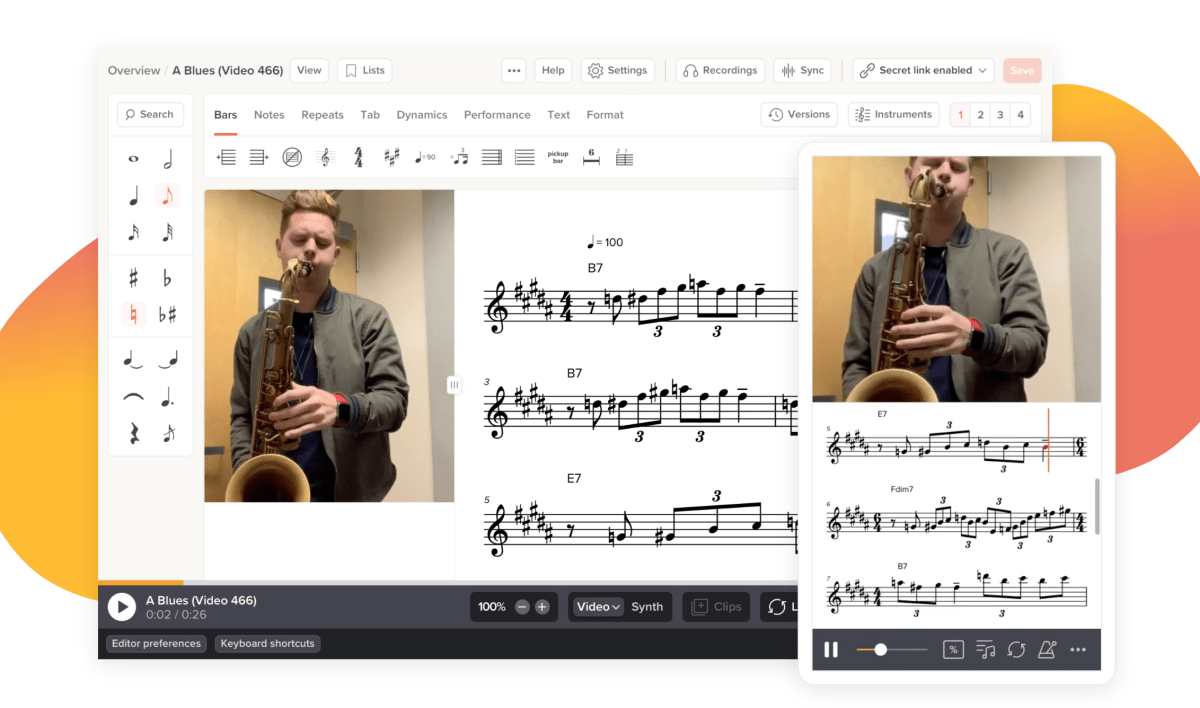

Soundslice is an app for teaching music, used by students and teachers. It's known for its video player synchronized to the music notation, which guides users on how the notes should be played.

It also offers a feature called "sheet music scanner," which allows users to upload an image of paper sheet music and, using AI, automatically turns it into an interactive sheet, complete with notations.

Holovaty carefully watches this feature's error logs to see what problems occur and where to add improvements, he said.

That's where he started seeing the uploaded ChatGPT sessions.

They were creating a bunch of error logs. Instead of images of sheet music, these were images of words and a box of symbols known as ASCII tablature. That's a basic text-based system for guitar notation that uses a regular keyboard. (There's no treble clef key, for instance, on your standard QWERTY keyboard.)

The volume of these ChatGPT session images was not so onerous that it was costing his company money to store them or crushing his app's bandwidth, Holovaty said. He was baffled, he wrote in a blog post about the situation.

"Our scanning system wasn't intended to support this style of notation. Why, then, were we being bombarded with so many ASCII tab ChatGPT screenshots? I was mystified for weeks, until I messed around with ChatGPT myself."

That's how he saw ChatGPT telling people they could hear this music by opening a Soundslice account and uploading the image of the chat session. Only, they couldn't. Uploading those images wouldn't translate the ASCII tab into audio notes.

He was struck with a new problem. "The main cost was reputational: new Soundslice users were going in with a false expectation. They'd been confidently told we would do something that we don't actually do," he told TechCrunch.

He and his team discussed their options: slap disclaimers all over the site about it ("No, we can't turn a ChatGPT session into hearable music") or build that feature into the scanner, even though he had never before considered supporting that offbeat musical notation.

He chose to build the feature.

"My feelings on this are conflicted. I'm happy to add a tool that helps people. But I feel like our hand was forced in a weird way. Should we really be developing features in response to misinformation?" he wrote.

He also wondered if this was the first documented case of a company having to develop a feature because ChatGPT kept repeating its hallucination about it to many people.

Fellow programmers on Hacker News had an interesting take on it: several of them said it's no different from an overeager human salesperson promising the world to prospects and then forcing developers to deliver new features.

"I think that's a very apt and amusing comparison!" Holovaty agreed.